Local AI Inference Server

A local AI application development server and network in my lab, built on a custom-configured hardware and software stack.

Project Brief

A high-performance, custom-built local computing environment housing powerful GPUs and optimized software stacks for fast AI inference. It was created to explore and master the end-to-end AI application development process, along with the hardware optimizations that support it.

Solution

When building this machine, I wanted to be able to run local AI models, agents, and workflows alongside cloud-hosted solutions so that I could develop applications fully from the ground up. The real purpose, though, was to use this opportunity to gain the knowledge and hands-on experience needed to lead future AI-enabled projects, including:

1. Ground-up hardware system configuration, and understanding the bottlenecks, nuances, and trends that determine how effectively these applications run.

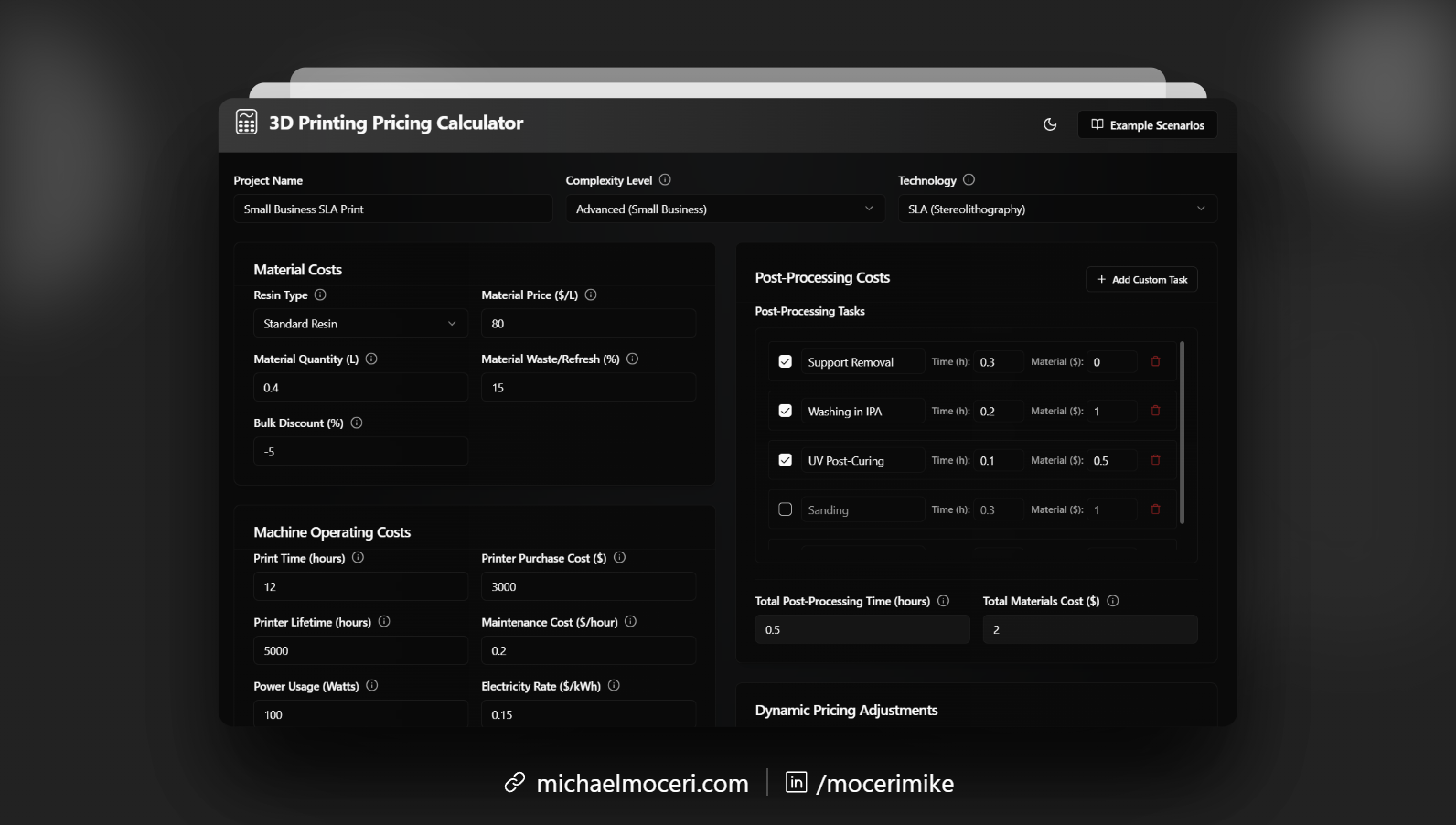

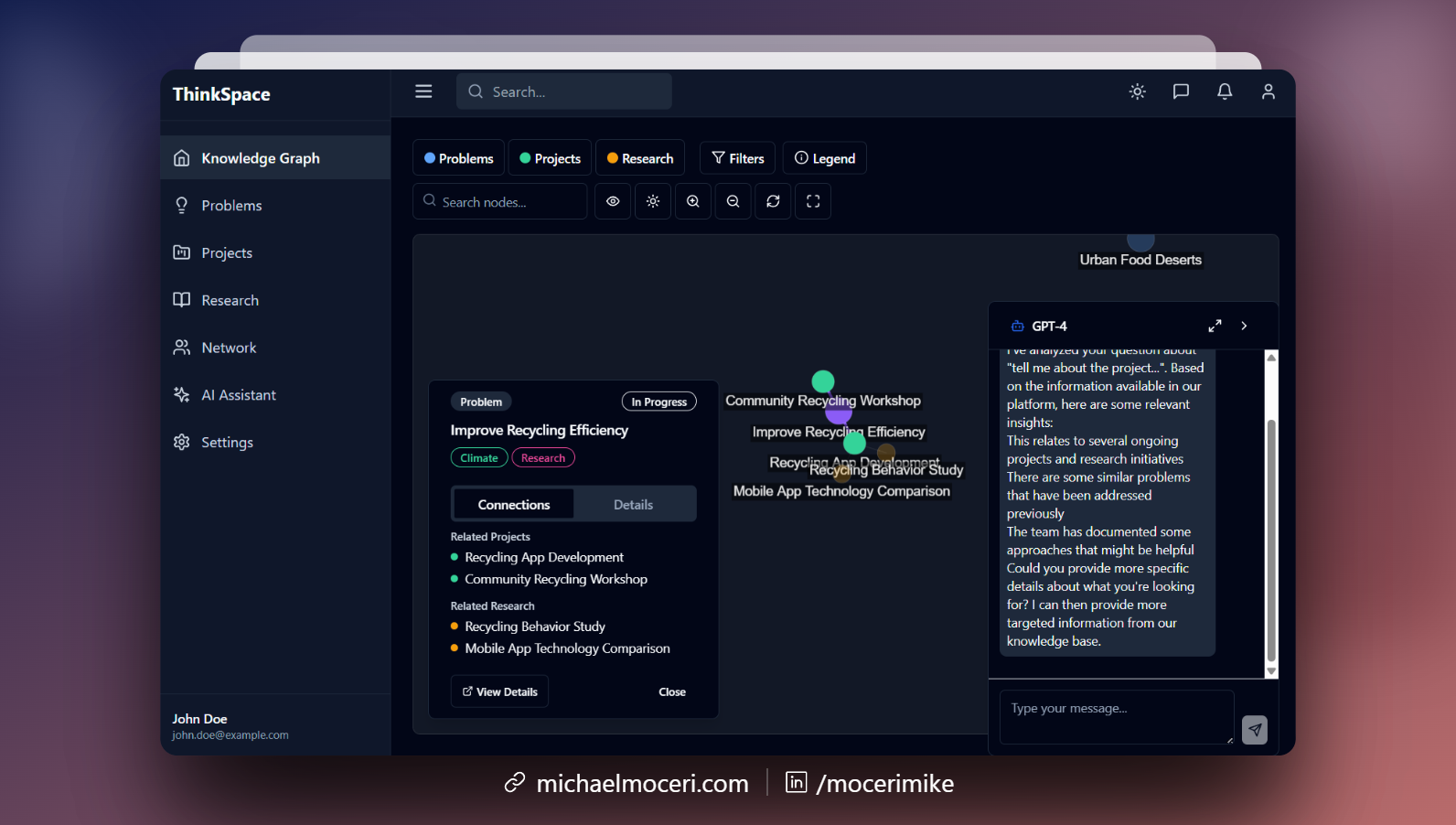

2. Development environment configuration through in-depth research and experimentation with tools such as Ollama, Open WebUI, RAG pipelines, N8N, and Bolt.diy.

3. Experimentation with various AI models and frameworks, such as custom distilled Llama 3.3 chain-of-thought (CoT) reasoning models, vision models, embedding models, and image models such as Flux, and integrating them into application development pipelines, tools, apps, and open-source projects.

Future Development

Upgrade and expand the GPU array with L40s or GDDR7-based equivalents to support larger models, longer context windows, and faster inference.

© 2025 Michael Moceri. All rights reserved.